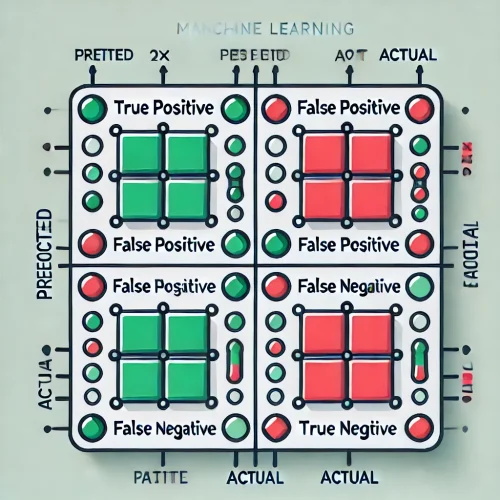

Confusion Matrix

The confusion matrix is a performance evaluation tool for classification problems. It is a table that compares a model's predicted class labels with the actual class labels, with one axis representing the actual labels and the other the predicted labels. Each cell counts the examples with a given combination of actual and predicted class, so it is easy to see how many examples from a specific class were correctly predicted and how many were mislabeled.

Structure of a Confusion Matrix

For a binary classification problem, the confusion matrix is a 2×2 table with the following entries:

| Actual \ Predicted | Positive | Negative |
|---|---|---|
| Positive | True Positive (TP) | False Negative (FN) |
| Negative | False Positive (FP) | True Negative (TN) |

- True Positive (TP): Cases where the model correctly predicts the positive class.

- True Negative (TN): Cases where the model correctly predicts the negative class.

- False Positive (FP): Cases where the model incorrectly predicts the positive class for a negative instance (Type I error).

- False Negative (FN): Cases where the model incorrectly predicts the negative class for a positive instance (Type II error).
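
The four counts defined above can be tallied directly from two lists of labels. The following is a minimal sketch, not a reference implementation: the function name `confusion_counts`, the `positive` parameter, and the sample labels are all illustrative assumptions.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, FN, FP, TN) for binary labels.

    Hypothetical helper: `positive` marks which label is the positive class.
    """
    tp = fn = fp = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if actual == positive:
            if predicted == positive:
                tp += 1  # positive instance predicted positive
            else:
                fn += 1  # positive instance predicted negative (Type II error)
        else:
            if predicted == positive:
                fp += 1  # negative instance predicted positive (Type I error)
            else:
                tn += 1  # negative instance predicted negative
    return tp, fn, fp, tn


# Made-up labels, used only to exercise the function
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]

tp, fn, fp, tn = confusion_counts(y_true, y_pred)
print(f"TP={tp} FN={fn} FP={fp} TN={tn}")
```

The return order (TP, FN, FP, TN) follows the table above when read row by row.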

From these four counts, further measures such as precision, recall, and F1-score are calculated. Together with the confusion matrix, they give better insight into a model's predictions than accuracy alone.

Precision

Precision answers the question: "Out of all examples predicted positive, what percentage is truly positive?" Its value lies between 0 and 1. A good prediction model yields a large number of true positives (TP) and a small number of false positives (FP). Precision is the ratio of TP to the sum of TP and FP, i.e.,

Precision = TP / (TP + FP)

Recall/Sensitivity

Recall, also called sensitivity, is defined from the TP and FN values. It is the ratio of TP to the sum of TP and FN, i.e., out of all actual positive examples, what percentage is predicted positive.

Recall = TP / (TP + FN)

Imagine that the prediction model labels every star as 2, i.e., as having a planet. Then the number of TP values is at its maximum, 5, but the number of FP values is also at its maximum, 565. In this case, Precision = 5/(5 + 565) ≈ 0.009, which is very low. The model also produces 0 FN values, so Recall = TP/(TP + FN) = 5/(5 + 0) = 1.
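
The arithmetic of this example can be checked with a short sketch. The function names are illustrative, and returning 0.0 when a denominator is zero is an assumed convention, not something the text specifies. The F1-score is mentioned earlier but not defined in the text; the sketch uses the standard definition, the harmonic mean of precision and recall.

```python
def precision(tp, fp):
    # Assumed convention: return 0.0 if nothing was predicted positive
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    # Assumed convention: return 0.0 if there are no actual positives
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p, r):
    # Standard definition (not given in the text): harmonic mean of p and r
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Counts from the example: every star is labelled as having a planet
tp, fp, fn = 5, 565, 0

p = precision(tp, fp)
r = recall(tp, fn)
f1 = f1_score(p, r)
print(f"precision={p:.4f} recall={r:.4f} f1={f1:.4f}")
```

Perfect recall alone says little here: the F1-score stays close to the very low precision because the harmonic mean is dominated by the smaller of the two values.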

In some problems high recall is more critical than high precision, and in others the reverse holds. For example, consider that we are trying to determine the toxicity of a substance (toxic being the positive class and non-toxic the negative class). In this context, high recall is critical because it means that, of all the toxic substances, most were correctly labelled as toxic.

Accuracy

Another traditional metric employed in classification model assessment is accuracy. Accuracy is the number of correctly classified examples over the total number of predictions, defined as:

Accuracy = (TP + TN) / (TP + FP + TN + FN)

Accuracy is most informative when errors on every class are equally important and the classes are roughly balanced.
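
A short sketch shows why accuracy alone can mislead on imbalanced data. It reuses the 5 positive and 565 negative examples from the star example, but the model that predicts the negative class for every example is a hypothetical scenario introduced here for illustration.

```python
def accuracy(tp, tn, fp, fn):
    # Correctly classified examples over all predictions
    return (tp + tn) / (tp + fp + tn + fn)


# Hypothetical model that predicts "negative" for every example:
# all 565 negatives are correct, all 5 positives are missed.
tp, fn = 0, 5
tn, fp = 565, 0

acc = accuracy(tp, tn, fp, fn)
rec = tp / (tp + fn)
print(f"accuracy={acc:.3f} recall={rec:.3f}")
```

The accuracy is above 0.99 even though the model never finds a single positive example, which is why precision and recall are reported alongside it.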

What is ROC?

The receiver operating characteristic (ROC) curve is a graph that shows the performance of a classification model across classification thresholds. It can therefore only be used with classifiers that output a confidence score or probability, such as logistic regression or neural networks.

The ROC curve uses two measures: the true positive rate (TPR), which is the same as recall, and the false positive rate (FPR), defined as FP / (FP + TN). The graph is created by plotting the TPR against the FPR at each threshold. Lowering the classification threshold classifies more items as positive, which increases both TPR and FPR. Conversely, raising the threshold makes both TPR and FPR tend to zero.
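
The threshold sweep described above can be sketched as follows. The function name `roc_points`, the scores, and the thresholds are illustrative assumptions, and an example is treated as positive when its score is greater than or equal to the threshold. The sketch also assumes both classes are present, since otherwise a denominator would be zero.

```python
def roc_points(y_true, scores, thresholds):
    """Return a list of (threshold, FPR, TPR) tuples.

    Hypothetical helper; assumes labels are 0/1 and both classes occur.
    """
    points = []
    for t in thresholds:
        tp = fp = fn = tn = 0
        for actual, score in zip(y_true, scores):
            predicted = 1 if score >= t else 0
            if actual == 1 and predicted == 1:
                tp += 1
            elif actual == 1:
                fn += 1
            elif predicted == 1:
                fp += 1
            else:
                tn += 1
        tpr = tp / (tp + fn)  # same as recall
        fpr = fp / (fp + tn)
        points.append((t, fpr, tpr))
    return points


# Made-up labels and classifier scores
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]

points = roc_points(y_true, scores, thresholds=[0.0, 0.3, 0.5, 0.9])
for t, fpr, tpr in points:
    print(f"threshold={t:.1f} FPR={fpr:.2f} TPR={tpr:.2f}")
```

With these values, the lowest threshold gives FPR = TPR = 1 and the highest gives FPR = TPR = 0, matching the behaviour described in the text.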

The area under the ROC curve (AUC) is an aggregate measure that summarises the ROC curve in a single number. The higher the AUC, the better the classifier. A random classifier has an AUC of 0.5. An AUC below 0.5 typically indicates that something is wrong with the model, the data labels, or the choice of train/test data set.
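
One common way to approximate the AUC from a finite set of ROC points is the trapezoidal rule. The sketch below assumes this method (the text does not say how the area is computed) and uses a hypothetical helper name, `auc_trapezoid`. The two test curves are the diagonal of a random classifier and the corner-hugging curve of a perfect one.

```python
def auc_trapezoid(fpr, tpr):
    """Approximate the area under a ROC curve with the trapezoidal rule.

    Hypothetical helper; points are sorted by FPR (then TPR) before summing.
    """
    pts = sorted(zip(fpr, tpr))
    area = 0.0
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        area += (x1 - x0) * (y0 + y1) / 2  # area of one trapezoid
    return area


# Diagonal ROC curve of a random classifier
random_auc = auc_trapezoid([0.0, 0.5, 1.0], [0.0, 0.5, 1.0])

# ROC curve of a perfect classifier: straight up, then straight across
perfect_auc = auc_trapezoid([0.0, 0.0, 1.0], [0.0, 1.0, 1.0])

print(random_auc, perfect_auc)
```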