What is DALL-E

DALL-E (pronounced “Dolly”) is a series of artificial intelligence models developed by OpenAI that can generate original digital images from natural language descriptions, known as “prompts.”

The name is a blend of “Dalí” (after Salvador Dalí) and “WALL-E.” The first version adapted the GPT-3 transformer architecture, while later versions moved to diffusion models; all of them turn a user’s text description into a detailed, often creative image.

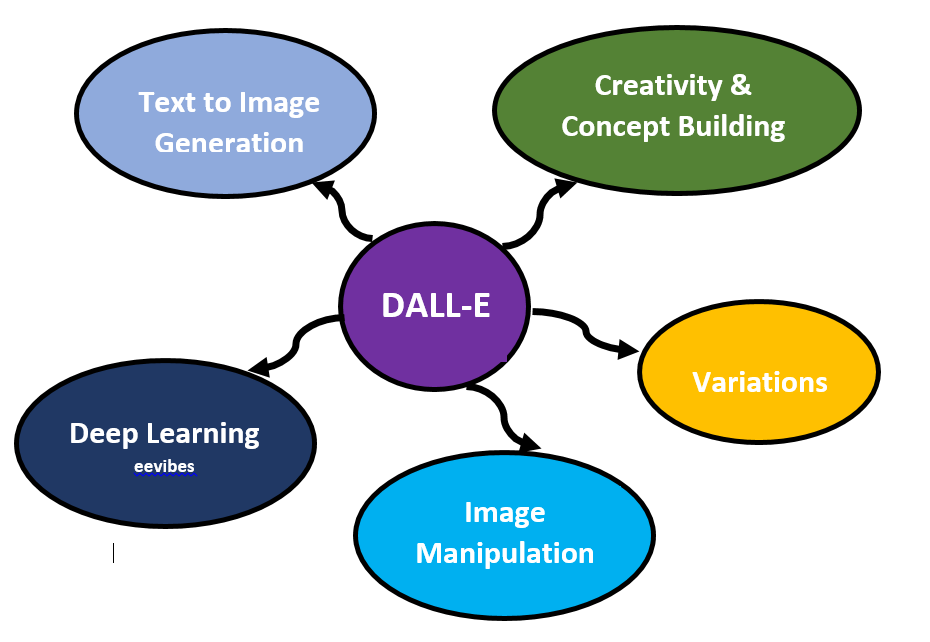

Key Features of DALL-E

Its key capabilities include:

- Text-to-Image Generation: The core function of DALL-E is to take a written description (e.g., “a surrealist painting of an elephant wearing a top hat riding a bicycle”) and create an image that matches that description.

- Deep Learning: DALL-E learns the relationship between text and visual concepts from a massive dataset of image-text pairs. The first version used a transformer neural network similar to the one behind GPT-3; DALL-E 2 and DALL-E 3 generate images with diffusion models, while still relying on transformer-based encoders to interpret the text.

- Creativity and Concept Blending: DALL-E is known for its ability to combine unrelated concepts in plausible and often artistic ways, generate anthropomorphized objects, and apply various styles (photorealistic, painting, emoji, etc.).

- Image Manipulation (Inpainting and Outpainting):

  - Inpainting: DALL-E can edit existing images by adding or removing objects, taking into account shadows, reflections, and textures to seamlessly integrate the changes.

  - Outpainting: It can also extend an image beyond its original borders, generating new visual elements that match the style and content of the existing image.

- Variations: DALL-E can generate multiple variations of an image based on an original image or a text prompt, offering users more creative options.
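
Inpainting and outpainting are typically driven by a mask that marks which pixels the model may regenerate. OpenAI’s image-edit endpoint for DALL-E 2, for example, treats fully transparent (alpha = 0) areas of the mask as the region to edit. The sketch below illustrates that idea with plain Python lists instead of an image library. The helpers `inpaint_mask` and `outpaint_canvas` are hypothetical, and framing outpainting as inpainting on an enlarged canvas is one common approach, not necessarily how OpenAI implements it.

```python
# Toy image model: a list of rows, each pixel an (r, g, b, alpha) tuple.
Pixel = tuple[int, int, int, int]
Image = list[list[Pixel]]

TRANSPARENT: Pixel = (0, 0, 0, 0)     # alpha 0 marks "regenerate this pixel"
OPAQUE: Pixel = (255, 255, 255, 255)  # alpha 255 marks "keep this pixel"


def inpaint_mask(width: int, height: int, box: tuple[int, int, int, int]) -> Image:
    """Build a mask that is opaque everywhere except inside box = (left, top, right, bottom)."""
    left, top, right, bottom = box
    return [
        [TRANSPARENT if left <= x < right and top <= y < bottom else OPAQUE
         for x in range(width)]
        for y in range(height)
    ]


def outpaint_canvas(image: Image, pad: int) -> Image:
    """Center `image` on a larger canvas whose new border is transparent, so that
    extending the picture becomes an inpainting job on the border pixels."""
    new_width = len(image[0]) + 2 * pad
    canvas: Image = [[TRANSPARENT] * new_width for _ in range(pad)]
    for row in image:
        canvas.append([TRANSPARENT] * pad + list(row) + [TRANSPARENT] * pad)
    canvas.extend([TRANSPARENT] * new_width for _ in range(pad))
    return canvas


# Usage: mark a 2x2 hole in a 4x4 image, then pad a 2x2 image by one pixel on each side.
mask = inpaint_mask(4, 4, box=(1, 1, 3, 3))
canvas = outpaint_canvas([[(10, 20, 30, 255)] * 2 for _ in range(2)], pad=1)
```

In both cases the model sees the same kind of input: known pixels to preserve and transparent pixels to fill in so they blend with their surroundings.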

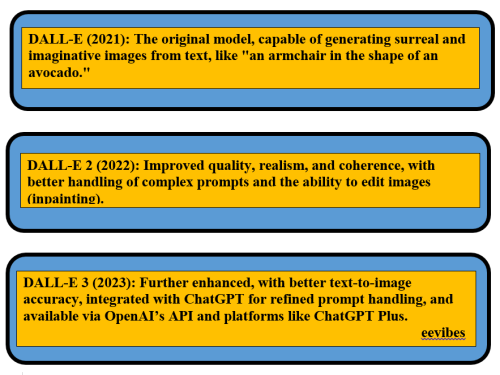

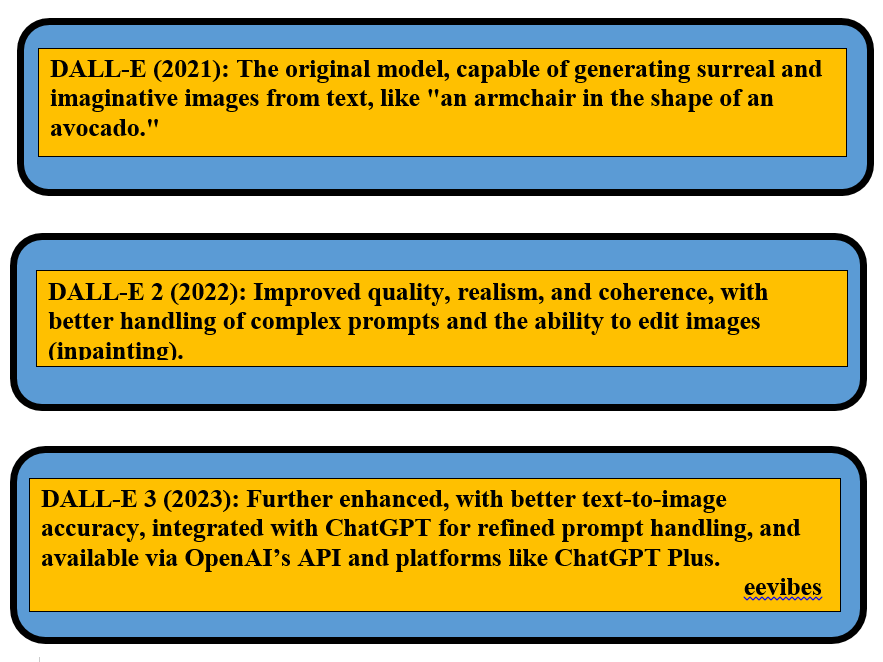

Evolution of DALL-E

DALL-E has evolved through three versions (DALL-E 1, DALL-E 2, and DALL-E 3), each offering better image quality, greater realism, and a stronger grasp of complex prompts. DALL-E 3, in particular, is noted for its precision and its ability to follow nuanced, detailed prompts. Each version is described in detail below.

DALL-E 1 (Released January 2021)

- Initial Breakthrough: DALL-E 1 was a landmark for generative AI. It showed that a model could create novel images from text descriptions, a feat that was highly impressive at the time.

- Architecture: It was a 12-billion-parameter version of GPT-3 trained to generate images from text descriptions, using a dataset of text-image pairs. A discrete Variational Autoencoder (dVAE) compressed each 256×256 image into a 32×32 grid of 1,024 image tokens, and an autoregressive Transformer (like GPT-3) modeled the text tokens and image tokens as a single sequence, predicting one token at a time.

- Capabilities:

  - Could create anthropomorphized versions of animals and objects.

  - Combined unrelated concepts in plausible ways (e.g., “an avocado armchair”).

  - Could render text (though often imperfectly) and apply transformations to existing images.

  - Showed some control over attributes and object positioning, but its success rate dropped as prompts grew more complex.

- Limitations:

  - Generated low-resolution images (256×256 pixels).

  - Struggled to keep complex scenes consistent and realistic.

  - Was brittle: rephrasing a prompt could change how the model interpreted it.

  - Had difficulty generating photorealistic images.
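
DALL-E 1’s pipeline can be sketched as token bookkeeping: the text and the image both become integer tokens, and the transformer simply predicts the next token in one combined stream. The sizes below follow the DALL-E paper (a 16,384-token text vocabulary, up to 256 text tokens, an 8,192-entry dVAE codebook, and a 32×32 image-token grid). The padding id, the id-offset scheme, the example token ids, and the `build_sequence` helper are hypothetical choices for this illustration, and the dVAE and transformer themselves are left out.

```python
TEXT_VOCAB = 16_384      # BPE text vocabulary size reported for DALL-E 1
IMAGE_VOCAB = 8_192      # dVAE codebook size: each image token takes one of 8,192 values
MAX_TEXT_TOKENS = 256    # text is capped at 256 tokens
GRID = 32                # the dVAE turns a 256x256 image into a 32x32 token grid
PAD_ID = TEXT_VOCAB + IMAGE_VOCAB  # hypothetical padding id, outside both id ranges


def build_sequence(text_ids: list[int], image_codes: list[int]) -> list[int]:
    """Join padded text tokens and image tokens into the single stream that the
    transformer models left to right (hypothetical helper for illustration)."""
    if len(text_ids) > MAX_TEXT_TOKENS:
        raise ValueError("text is longer than 256 tokens")
    if len(image_codes) != GRID * GRID:
        raise ValueError("expected 32 * 32 = 1024 image tokens")
    if not all(0 <= c < IMAGE_VOCAB for c in image_codes):
        raise ValueError("image code outside the dVAE codebook")
    padded_text = text_ids + [PAD_ID] * (MAX_TEXT_TOKENS - len(text_ids))
    # Shift image codes past the text vocabulary so the two id ranges never overlap.
    image_tokens = [TEXT_VOCAB + c for c in image_codes]
    return padded_text + image_tokens


# 256x256 RGB values versus 1,024 tokens: the transformer's context shrinks 192-fold.
COMPRESSION = (256 * 256 * 3) // (GRID * GRID)

sequence = build_sequence([17, 942, 3051], [0] * (GRID * GRID))
print(len(sequence), COMPRESSION)  # 1280 192
```

The 192-fold reduction is what made it practical to model images with a GPT-style transformer: 1,280 tokens fit in its context, whereas 196,608 raw pixel values would not.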

DALL-E 2 (Released April 2022)

- Significant Leap Forward: DALL-E 2 brought substantial improvements in realism, resolution, and understanding of complex prompts, making AI image generation much more practical and visually impressive.

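- Architectural Shift: Unlike DALL-E 1’s autoregressive Transformer, DALL-E 2 used a diffusion model conditioned on CLIP (Contrastive Language-Image Pre-training) image embeddings. A “prior” model first maps the prompt’s CLIP text embedding to a CLIP image embedding, and a diffusion decoder then generates the image from that embedding by progressively removing noise. This architecture produced more realistic, higher-quality images.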

- Key Features and Improvements:

  - Enhanced Resolution and Image Quality: Generated images at four times the width and height of DALL-E 1’s 256×256 output (1024×1024 pixels), with finer details and significantly improved photorealism.

  - Improved Compositional Understanding: Better at handling complex prompts involving multiple objects, attributes, and their spatial relationships. It could distinguish nuances like “a yellow book and a red vase” from “a red book and a yellow vase” more effectively (though still not perfectly).

  - Inpainting (Editing): Introduced the ability to make realistic, targeted, and context-aware edits to existing images (generated or uploaded) using natural language descriptions. This included adding or removing elements while accounting for shadows, reflections, and textures.

  - Outpainting (Extension): Allowed users to extend images beyond their original borders, generating new visual elements that seamlessly matched the existing style and content.

  - Variations: Could take an image and create different variations inspired by the original.

- Access: Initially available only to a limited group as a research preview, it entered beta for a wider audience in July 2022, and OpenAI removed the waitlist in September 2022. A public API for developers followed in November 2022.

- Safety Focus: OpenAI implemented safety measures to prevent the generation of harmful content (violent, hateful, or adult images) and of photorealistic images of real individuals’ faces.
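
A diffusion decoder like DALL-E 2’s is trained by corrupting images with Gaussian noise according to a schedule, x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε, and learning to predict that noise (conditioned, in DALL-E 2, on the CLIP image embedding) so that generation can run the process in reverse. The sketch below shows only the noising step and its algebraic inversion. It assumes a standard DDPM-style linear schedule (1,000 steps, β from 0.0001 to 0.02), which is not DALL-E 2’s published configuration, and it passes in the true noise where a trained, CLIP-conditioned network would supply a prediction. All function names are hypothetical.

```python
import math
import random


def linear_alpha_bars(steps: int = 1000, beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> list[float]:
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    alpha_bars, running = [], 1.0
    for t in range(steps):
        beta = beta_start + (beta_end - beta_start) * t / (steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0: list[float], alpha_bar: float, noise: list[float]) -> list[float]:
    """Forward process: x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * x + b * e for x, e in zip(x0, noise)]


def estimate_x0(xt: list[float], alpha_bar: float, predicted_noise: list[float]) -> list[float]:
    """Invert the forward step given a noise estimate. In a real decoder the estimate
    comes from a network conditioned on the image embedding; here the caller supplies it."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [(x - b * e) / a for x, e in zip(xt, predicted_noise)]


rng = random.Random(0)
x0 = [0.5, -0.25, 1.0]                       # stand-in for a few pixel values
alpha_bars = linear_alpha_bars()
noise = [rng.gauss(0.0, 1.0) for _ in x0]
xt = add_noise(x0, alpha_bars[500], noise)   # halfway through the schedule
recovered = estimate_x0(xt, alpha_bars[500], noise)  # oracle: uses the true noise
```

With a perfect noise estimate the clean values come back exactly; in practice the network’s estimate is imperfect, so generation removes noise gradually over many steps rather than in one jump.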

DALL-E 3 (Announced September 2023, Public release October 2023)

- Deep Integration with ChatGPT: A major advancement of DALL-E 3 is its native integration with ChatGPT. This allows users to leverage ChatGPT as a “brainstorming partner” to refine and expand their prompts, leading to more precise and nuanced image generations without requiring extensive “prompt engineering” skills.

- Enhanced Prompt Following: DALL-E 3 is significantly better at understanding long, complex, and detailed prompts, capturing nuances that previous versions often missed. It also improves on two common weaknesses of earlier models: rendering legible text within images and drawing intricate details such as hands.

- Improved Accuracy and Detail: Continues to push the boundaries of image quality, producing even more lifelike and visually compelling images.

- Safety and Ethical AI: DALL-E 3 includes more robust safety protocols. It declines to generate images of copyrighted characters, content, or logos, and declines requests for images in the style of a living artist. It also tightens restrictions on inappropriate, violent, or misleading content. In addition, OpenAI began researching a provenance classifier to help identify AI-generated images.

- Accessibility: DALL-E 3 is accessible through ChatGPT Plus subscriptions and is also integrated into Microsoft’s Bing Image Creator and Copilot.
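
Developers can also reach DALL-E through OpenAI’s Images API, an HTTP endpoint that serves both DALL-E 2 and DALL-E 3. The sketch below uses only the Python standard library to build, but not send, an image-generation request. The endpoint URL, the `model`, `prompt`, `n`, and `size` fields, the per-model size lists, and the one-image-per-request limit for `dall-e-3` follow OpenAI’s API reference at the time of writing and may change, so check the current reference before relying on them. `build_generation_request` is a hypothetical helper, not part of any SDK.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/images/generations"

# Output sizes documented for each model at the time of writing (assumption: may change).
ALLOWED_SIZES = {
    "dall-e-2": {"256x256", "512x512", "1024x1024"},
    "dall-e-3": {"1024x1024", "1792x1024", "1024x1792"},
}


def build_generation_request(prompt: str, api_key: str, model: str = "dall-e-3",
                             size: str = "1024x1024", n: int = 1) -> urllib.request.Request:
    """Build (but do not send) a POST request for the image-generation endpoint."""
    if size not in ALLOWED_SIZES[model]:
        raise ValueError(f"{size} is not supported by {model}")
    if model == "dall-e-3" and n != 1:
        raise ValueError("dall-e-3 generates one image per request")
    body = {"model": model, "prompt": prompt, "n": n, "size": size}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


req = build_generation_request(
    "a surrealist painting of an elephant wearing a top hat riding a bicycle",
    api_key="YOUR_API_KEY",  # placeholder, not a real key
)
```

Sending it with `urllib.request.urlopen(req)` requires a valid API key and network access. The JSON response carries a `data` list with a `url` (or `b64_json`) for each image; for `dall-e-3`, each item also includes a `revised_prompt` showing how the prompt was rewritten before generation, a behavior related to the prompt refinement that ChatGPT performs.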

In essence, the evolution of DALL-E reflects a progression from a novel proof-of-concept (DALL-E 1) to a highly sophisticated and user-friendly image generation tool (DALL-E 3), continually improving in its ability to translate human language into compelling visual art.