A Humanoid Robot Broadcast Control System

A Humanoid Robot Broadcast Control System Using Wireless Marionette Style. When a speaker transmits a message to a listener, the message is assumed to be conveyed more accurately if a suitable gesture accompanies it. This holds whether the speaker and listener are close together or far apart. However, transmitting messages and gestures synchronously to a remote listener normally requires a real-time video communication system. In this study, we therefore propose a low-cost mechanism for presenting motions synchronized with the message when sending messages to remote listeners. Instead of relying on video to convey the speaker’s gesture, we devise a way to move a robot at the listener’s location. The motion of the speaker’s hand is acquired with a motion sensor, processed, and converted into commands for the motors that correspond to the robot’s joints. These commands are transmitted to the distant robot, which then moves as the speaker intends. To implement the proposed approach, we created a mechanism that converts the speaker’s hand gesture into robot motion data in real time and allows the robot to be controlled from a distance. The system also includes a function for sending high-priority information, such as disaster information, to the robots in every household at once. We built a prototype of an information presentation system based on these specifications. We expect this technique to help the speaker’s message reach many listeners accurately.

Introduction to A Humanoid Robot Broadcast Control System

Robot technology has been evolving rapidly in recent years. Its aim is to improve people’s lives through the use of robots in society; examples include security robots that protect public safety and nursing robots that assist patients. To be put into practical use in society, these robots must be extremely safe.

We found that when a robot delivers a message to a person, it is best for the robot’s movement to accompany the spoken message. However, continuously operating a remote robot while speaking is difficult for the speaker. For a robot to present a motion together with speech to a listener at a remote location, a device is needed that lets the speaker control the robot with simple gestures. A technology is also required that lets the remote robot move without delay in response to the speaker’s operation.

In this study, we define a robot motion design scheme that covers many combinations of sensor technologies and robots. We then develop an implementation method for one specific combination. With the mechanism provided here, the speaker can convey both the message and the motion to the remote listener through the robot with a simple operation. We anticipate that combining remote verbal communication, nonverbal communication, and communication robots will improve listeners’ acceptance of messages. Our goal is to create a method for designing robot motion that depends as little as possible on specific devices and technologies.

RELATED WORK

In this study, the humanoid robot is expected to act as an interface that correctly conveys to the listener what the speaker wants to say. Information transmission by robot has been studied in a variety of ways. According to Matsui et al., a humanoid robot has an advantage in mapping human movements to robot motions. Patsadu et al. proposed a method for detecting a person’s whole-body gesture by learning the joint configuration of the person as captured by the depth camera of a light coding system. Designing and producing motion data so that robots behave like humans is a difficult undertaking. The most promising approach is to take human actions as they are and translate them into a set of commands for controlling robots.

Humanoid robots have previously been used as interfaces for delivering messages to humans. The AIDA (Affective Intelligent Driving Agent) robot designed by Williams and Breazeal delivers messages that encourage safe driving. They mounted it on a car’s dashboard and conducted tests to demonstrate that sending messages through a robot is effective.

There have been numerous studies in the fields of telepresence, telexistence, and information presentation to remote parties. Telepresence refers to technology that gives users the impression of being on site with people at remote locations.

SYSTEM ORGANIZATION

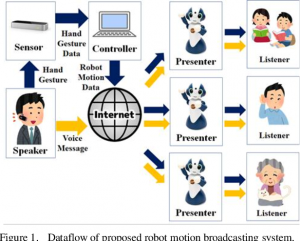

The proposed system measures the speaker’s gesture in real time, translates it into robot motion, transmits the motion data, and drives the robot from a distance. With this approach, one speaker can control multiple robots simultaneously. The setup is shown in the picture above.

There are five entities in this system: the presenter, the listener, the speaker, the sensor, and the controller. The speaker delivers a spoken message to the listener while simultaneously making hand gestures to operate the robot. The sensor captures the speaker’s hand gesture and converts it to data. The controller converts the hand gesture data into robot motion data and transmits it over the internet. The presenter receives the robot motion data together with the speech message and synchronizes the motion with the message. In this way, the robot conveys the information to the listener.

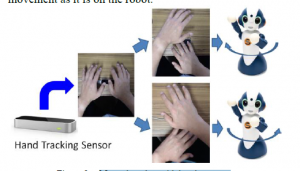

The second picture shows how the robot is controlled with the operator’s two hands. A sensor that tracks the movement of the operator’s palms and fingers in real time is used to acquire the motion of both hands. The robot’s movement is generated from the detection result in response to the operator’s movement. A common way of using the speaker’s gesture for the robot’s motion is to have the speaker move his or her whole body and have the robot imitate that movement.

We chose not to use the speaker’s gestures directly in this study, because we wanted the speaker to generate the robot’s motions deliberately rather than drive the robot with gestures made instinctively. Furthermore, recognizing whole-body gestures requires a high-performance computer and camera equipment.

To recognize the speaker’s gesture, this implementation focuses only on the speaker’s hands and fingers. We therefore use Leap Motion as the sensing device that detects the speaker’s hands and fingers. On the listener side, we use Vstone’s Sota as the robot. The robot’s body contains eight servo motors, which provide rotation at the left shoulder, left elbow, right shoulder, right elbow, and body base, plus three at the neck. By coordinating these motors and moving them at the same time, we can create natural, human-like motion.

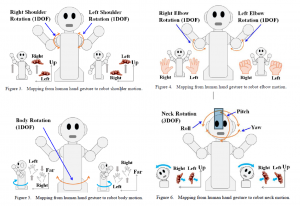

In Fig. 3, the operator raises and lowers a hand above the sensor to move the robot’s left and right shoulders up and down. Similarly, in Fig. 4, the speaker opens and closes a hand above the sensor to bend and extend the robot’s left and right elbows. As seen in Fig. 5, the speaker pulls either the left or the right hand toward the body to turn the robot’s body. Finally, by changing the angle of the palm above the sensor, the speaker can freely move the robot’s neck. Rules and mathematical formulas define the conversion between these gestures and the robot’s movement. The system translates the captured hand information into the corresponding motor angle values of the robot.
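The source does not give the conversion formulas, so the following is only a minimal sketch of one plausible form: a clamped linear map from a sensor reading to a motor angle. The class name, the joint names, and all numeric ranges (palm height in millimetres, grab strength between 0 and 1, angles in degrees) are assumptions for illustration and are not taken from the Leap Motion SDK or the Sota specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JointMapping:
    """Linear map from one gesture reading to one motor angle (assumed ranges)."""
    in_min: float   # smallest expected sensor reading
    in_max: float   # largest expected sensor reading
    out_min: float  # motor angle at in_min, in degrees
    out_max: float  # motor angle at in_max, in degrees

    def to_angle(self, reading: float) -> float:
        # Clamp the reading so the result never leaves the motor's range.
        clamped = max(self.in_min, min(self.in_max, reading))
        ratio = (clamped - self.in_min) / (self.in_max - self.in_min)
        return self.out_min + ratio * (self.out_max - self.out_min)


# Hypothetical ranges: palm height above the sensor in millimetres,
# grab strength from 0 (open hand) to 1 (closed hand).
LEFT_SHOULDER = JointMapping(100.0, 400.0, -90.0, 90.0)
LEFT_ELBOW = JointMapping(0.0, 1.0, 0.0, 90.0)


def hand_to_angles(palm_height_mm: float, grab_strength: float) -> dict:
    """Convert one hand's readings into shoulder and elbow angles."""
    return {
        "left_shoulder": LEFT_SHOULDER.to_angle(palm_height_mm),
        "left_elbow": LEFT_ELBOW.to_angle(grab_strength),
    }
```

With these assumed ranges, a palm held 250 mm above the sensor maps to a shoulder angle of 0 degrees, and readings outside the expected range are clamped to the nearest limit rather than passed on to the motor.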

In this study, to achieve nonverbal communication that matches the message the speaker sends during a conversation, we detect the movement of the speaker’s hands and fingers and generate the robot’s motion from the result. Turning a human gesture into a robot movement requires processing. The implementation of the proposed system is described below.

Controller

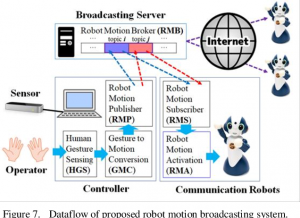

The controller in this system acquires the speaker’s hand gesture, converts it into robot motion data, and transmits that data.

First, the Human Gesture Sensing (HGS) module converts the gesture into data; it is implemented with the Leap Motion SDK. Next, the Gesture to Motion Conversion (GMC) module converts the gesture data into robot motion data. This conversion requires formulas that are constructed in advance, after examining the range of the available gesture data and the operable angle of each robot joint. Finally, the Robot Motion Publisher (RMP) module prepares the robot’s motion data and transmits it. The system uses the MQTT protocol for data transmission, which provides lightweight sending and receiving of data based on the publish-subscribe (Pub-Sub) model.
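As a rough illustration of what a publisher module like RMP might do, the sketch below serializes one frame of eight motor angles as JSON and hands it to an MQTT client. The joint names, the topic name, the JSON payload format, and the use of a retained message are all assumptions; the source does not describe them. The `client` argument is any object with a `publish(topic, payload, qos, retain)` method, which is the shape offered by the Eclipse Paho Python client.

```python
import json

# Eight motors; these joint names are illustrative, not from the source.
JOINTS = (
    "left_shoulder", "left_elbow", "right_shoulder", "right_elbow",
    "body", "neck_1", "neck_2", "neck_3",
)

MOTION_TOPIC = "robot/sota/motion"  # hypothetical topic name


def encode_motion(angles: dict) -> str:
    """Serialize one motion frame as compact JSON, one angle per joint."""
    missing = [joint for joint in JOINTS if joint not in angles]
    if missing:
        raise ValueError(f"missing joints: {missing}")
    frame = {joint: round(float(angles[joint]), 1) for joint in JOINTS}
    return json.dumps(frame, separators=(",", ":"))


def publish_motion(client, angles: dict, topic: str = MOTION_TOPIC) -> None:
    """Publish one frame through any client exposing publish(topic, payload, qos, retain)."""
    # retain=True is an assumption: it asks the broker to keep the newest frame.
    client.publish(topic, encode_motion(angles), qos=0, retain=True)
```

Passing the client in as a parameter keeps the encoding logic testable without a running broker.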

Server for Broadcasting

In this system, the server acts as a data broker that relays data between the controller and the robot. The broker stores data separately for each topic, and when a publisher sends data to a topic, the stored data for that topic is overwritten with the newest value. The subscriber receives the most recent data on the topic. An advantage of this method is that the sending and receiving sides do not need to be synchronized with each other. It is also possible to communicate motion to robots of different shapes simultaneously by dividing the data into multiple topics.
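The behaviour described above (one stored value per topic, overwritten on each publish, with every subscriber receiving the newest data) can be modelled with a small in-memory class. This is a toy model for illustration only, not how the actual MQTT broker is implemented, and the class and method names are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Optional

Callback = Callable[[str, str], None]


class LatestValueBroker:
    """Toy in-memory broker: one stored value per topic, overwritten on publish."""

    def __init__(self) -> None:
        self._latest: Dict[str, str] = {}
        self._subscribers: Dict[str, List[Callback]] = defaultdict(list)

    def publish(self, topic: str, payload: str) -> None:
        # Overwrite the previous value, then fan out to every subscriber.
        self._latest[topic] = payload
        for callback in self._subscribers[topic]:
            callback(topic, payload)

    def subscribe(self, topic: str, callback: Callback) -> None:
        self._subscribers[topic].append(callback)
        # A subscriber that joins late still receives the newest stored value.
        if topic in self._latest:
            callback(topic, self._latest[topic])

    def latest(self, topic: str) -> Optional[str]:
        return self._latest.get(topic)
```

Because the publisher only writes to a topic and each subscriber only reads from it, neither side needs to know when the other is running, and several robots can subscribe to the same topic to receive the same motion at once.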

Communication Robot

In this system, the communication robot acquires robot motion data from the server using the Robot Motion Subscriber (RMS) module. The Robot Motion Activation (RMA) module uses the acquired data to set the angle value of each motor, so that the robot follows the speaker’s instructions. The robot used here has a built-in Linux-based controller. The RMS module, which receives robot motion data, and the RMA module, which drives the robot’s motion, are both developed in a programming environment such as Eclipse and then transferred to the built-in controller. Functions such as acquiring the message and uttering it rely on functionality that already exists.
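The receive-and-activate step can be sketched as follows. The prototype’s robot-side modules are written in Java; Python is used here only to illustrate the logic. The JSON payload format, the joint names, the angle limits, and the `set_servo` callback are assumptions, not part of the Sota API.

```python
import json
from typing import Callable, Dict, Tuple

# Hypothetical operable range of each motor, in degrees.
JOINT_LIMITS: Dict[str, Tuple[float, float]] = {
    "left_shoulder": (-90.0, 90.0),
    "left_elbow": (0.0, 90.0),
    "right_shoulder": (-90.0, 90.0),
    "right_elbow": (0.0, 90.0),
    "body": (-60.0, 60.0),
    "neck_1": (-30.0, 30.0),
    "neck_2": (-30.0, 30.0),
    "neck_3": (-30.0, 30.0),
}


def decode_motion(payload: bytes) -> Dict[str, float]:
    """Parse a received frame, ignore unknown joints, clamp to each motor's range."""
    frame = json.loads(payload.decode("utf-8"))
    angles: Dict[str, float] = {}
    for joint, (low, high) in JOINT_LIMITS.items():
        if joint in frame:
            angles[joint] = max(low, min(high, float(frame[joint])))
    return angles


def activate(angles: Dict[str, float], set_servo: Callable[[str, float], None]) -> None:
    """Apply each angle through a caller-supplied servo function."""
    for joint, angle in angles.items():
        set_servo(joint, angle)
```

Clamping on the robot side as well as on the controller side is a defensive choice in this sketch: it protects the motors even if a publisher sends values outside the expected range.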

PRELIMINARY EXPERIMENTS

We built a prototype system based on the design described in the preceding section and conducted a preliminary test. Figure 7 shows the installed system. The control PC runs Linux (Ubuntu), and all controller modules run in this environment and are written in Python. The Leap Motion is connected to the control PC, which converts the hand motion detected by the sensor into angle values for the robot’s eight motors. To broadcast these values to multiple robots, we built a function on the free MQTT broker Mosquitto. All of the robot’s modules are written in Java, and they use the Eclipse Paho library to read the robot angle information from the broker.

CONCLUSION

In this study, we designed an information presentation system in which a robot displays gestures, operated by the speaker, simultaneously with the speaker’s message. We conducted an experiment with the system and found that the speaker could freely control the robot at the remote site by gesture. With a faster control PC and a robot with an upgraded built-in controller, we expect even smoother and more natural robot motions in the future. The experiments also taught us several lessons. One is that coupling the motion of a human hand to the motion of a robot requires understanding both motions and their respective ranges, and then establishing the correspondence between them.

The preliminary experiments in this research also showed that the speaker needs some familiarity with the gestures in order to generate motion in real time with hand gestures. In our earlier work, the robot motion used with a message was prepared by converting a human hand gesture into motion, editing the motion data, and then calling it up.
